Total 234 Questions

Last Updated On : 1-Jan-2026

Preparing with a Salesforce-MuleSoft-Developer practice test helps you get ready for the exam. The practice test lets you familiarize yourself with the format of the Salesforce-MuleSoft-Developer exam questions and identify your strengths and weaknesses. By practicing thoroughly, you can improve your chances of passing the Salesforce certification 2025 exam on your first attempt. Surveys from different platforms and user-reported pass rates suggest Salesforce Certified MuleSoft Developer Exam (SP25) practice exam users are ~30-40% more likely to pass.

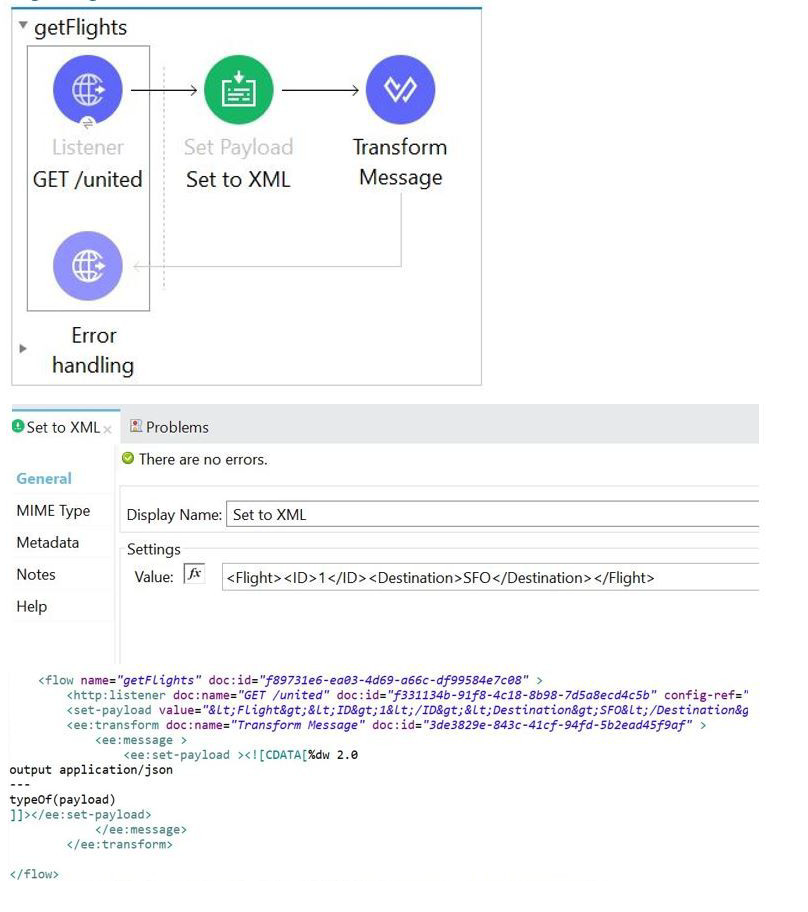

Refer to the exhibits.

A web client submits a request to the flow below. What is the output at the end of the flow?

A. String

B. Object

C. Java

D. XML

Explanation:

The flow consists of three main components: a Listener, a Set Payload component, and a Transform Message component.

Listener (GET /united): This component receives the inbound request, but its effect on the payload is minimal in this context. The payload starts empty (null) or based on the inbound request, but the next component immediately overwrites it.

Set Payload (Set to XML): This component sets the payload to a specific XML string value.

Value:

MIME Type: Not explicitly shown, but typically a literal string or XML structure, resulting in a payload of type String (containing the XML content).

Transform Message: This component is the final operation that determines the output data type. The DataWeave script inside the Transform Message is the key:

```
output application/json
---
typeOf(payload)
```

Output Directive: The output application/json directive indicates that the final output format of the Transform Message component will be JSON.

DataWeave Body: The body of the script is typeOf(payload). The typeOf() function in DataWeave returns the type of the value it inspects (for example String, Object, or Array). Since the payload entering the Transform Message is the XML text held as a string by the Set Payload component, typeOf(payload) returns the type String, which is written to JSON as "String".

Final Output: The output directive serializes the result as JSON, so the response body is the JSON string "String". XML does not appear as a result because it is a data format, not a DataWeave type. Therefore, the output at the end of the flow is String.

The most precise answer is String, as it's the result of the typeOf() function.

❌ Incorrect Answers

B. Object: typeOf(payload) would return Object only if DataWeave read the payload as structured data. Here the payload is a plain string, so the result is String.

C. Java: Java (application/java) is a data format in Mule, not a DataWeave type, so typeOf() never returns it.

D. XML: The payload enters the Transform Message as XML content held in a string, but XML is a data format rather than a DataWeave type. The script returns the type String, and the output is written as application/json.

📚 References

For detailed information on the components used: DataWeave typeOf() function: Refer to the MuleSoft documentation on DataWeave functions, which describes typeOf() as returning the type of a value.

Mule Flow Execution: Refer to the documentation on how the payload is passed between components and how the final component (Transform Message) dictates the final output structure and type.

A Mule application's HTTP Listener is configured with the HTTP protocol. The HTTP Listener's port attribute is configured with a property placeholder named http.port. The Mule application sets the http.port property placeholder's value to 9090.

The Mule application is deployed to CloudHub without setting any properties in the Runtime Manager Properties tab, and a log message reports the status of the HTTP Listener after the Mule application deployment completes.

After the Mule application is deployed, what information is reported in the worker logs related to the port on which the Mule application's HTTP Listener listens?

A. The HTTP Listener is listening on port 80

B. The HTTP Listener is listening on port 9090

C. The HTTP Listener is listening on port 8081

D. The HTTP Listener failed to bind to the port and is not listening for connections

Explanation:

In the scenario described, the Mule application's HTTP Listener is configured with a property placeholder named http.port, and the Mule application sets this property's value to 9090. On CloudHub, however, http.port is a reserved property: the platform assigns it the value 8081 for every deployed application and overrides any value defined inside the application. Therefore, the HTTP Listener binds to port 8081, not 9090.

This happens even though nothing was set in the Runtime Manager Properties tab. The CloudHub load balancer forwards external HTTP traffic to port 8081 on the worker, so the application must listen there. The worker logs, which report the status of the HTTP Listener after deployment, will show that the HTTP Listener is listening on port 8081. The correct answer is C.

Let’s briefly address why the other options are incorrect:

Option A: The HTTP Listener is listening on port 80

Port 80 is the port external clients use to reach the application's CloudHub URL, but the load balancer forwards that traffic to port 8081 on the worker. The listener itself does not bind to port 80, so this option is incorrect.

Option B: The HTTP Listener is listening on port 9090

9090 is the value set inside the application, but CloudHub overrides the reserved http.port property with 8081, so the application-defined value is not used. This option is incorrect.

Option D: The HTTP Listener failed to bind to the port and is not listening for connections

There is no indication in the scenario that the port binding failed. CloudHub replaces the http.port value with 8081 and the listener starts normally. Thus, this option is incorrect.

References:

MuleSoft Documentation: Configuring Properties in Mule explains how property placeholders work in Mule applications and how they are resolved during deployment.

MuleSoft Documentation: Deploying to CloudHub describes the reserved http.port and https.port properties that CloudHub sets for deployed applications.

MuleSoft Documentation: HTTP Connector details the configuration of the HTTP Listener, including the use of property placeholders for ports.

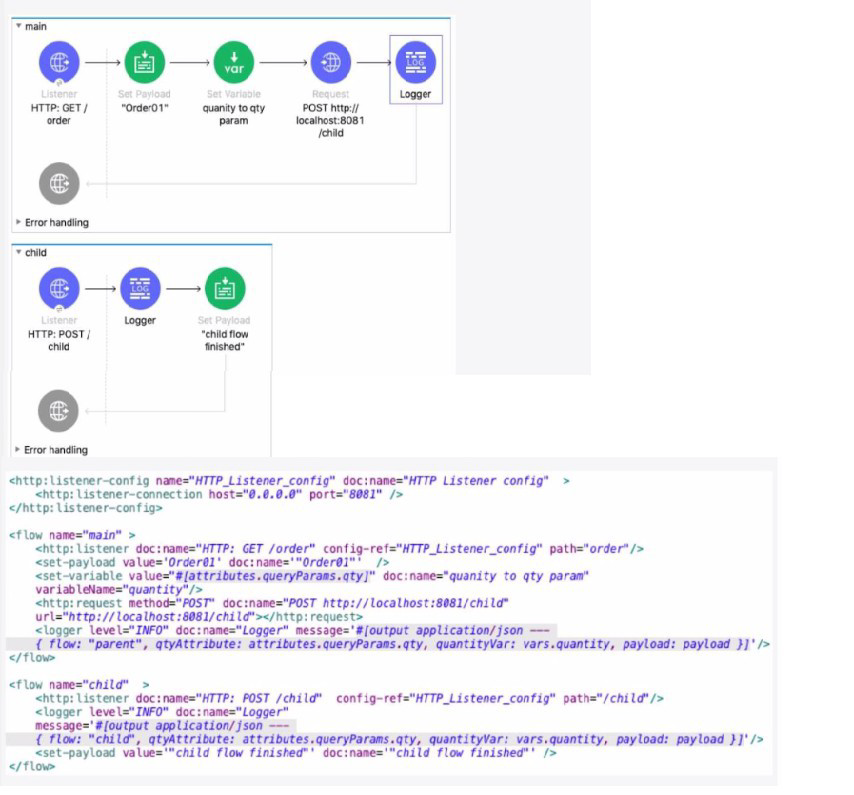

Refer to the exhibits. The main flow contains an HTTP Request operation configured to call the child flow's HTTP Listener.

A web client sends a GET request to the HTTP Listener with the qty query parameter set to 30.

After the HTTP Request operation completes, what parts of the Mule event at the main flow's Logger component are the same as the Mule event that was input to the HTTP Request operation?

A. The payload and all variables

B. All variables

C. The entire Mule event

D. The payload and all attributes

Explanation:

The key concept being tested is how the HTTP Request operation affects the Mule event in the main flow.

1. Initial Mule Event (Before HTTP Request):

Payload: Set to "Order01" by the set-payload component.

Variables: A variable named quantity is created with the value from the query parameter qty (which is 30).

Attributes: Contains the original HTTP request attributes (like query parameters, headers, etc.) from the listener.

2. Behavior of the HTTP Request Operation:

The HTTP Request operation (http:request) is a Message Processor that calls an external resource.

Crucially, when the HTTP Request operation completes, it replaces the payload and attributes of the Mule event in the main flow with the body and attributes of the HTTP response.

This means the original payload and attributes from before the call are lost, while the variables stay with the main flow's event and are untouched.

3. What Happens in the Child Flow:

The child flow is triggered by the main flow's HTTP Request.

Its HTTP Listener creates a new Mule event from the incoming HTTP request. Variables are not sent over HTTP, so the quantity variable from the main flow does not exist in the child flow.

Anything the child flow logs or sets applies only to its own event, not to the main flow's event.

The child flow's final action is to set the payload to "child flow finished".

4. Final Mule Event (After HTTP Request Completes):

The HTTP Request operation in the main flow completes and receives the response from the child flow.

Payload: This is now replaced by the child flow's final payload: "child flow finished". The original payload ("Order01") is gone.

Variables: The original variables (like quantity) are unchanged after the HTTP Request operation completes. Variables belong to the Mule event in the main flow and are not part of the HTTP exchange, so the response from the child flow cannot overwrite them.

Attributes: These are replaced by the attributes of the HTTP Response received from the child flow. The original HTTP request attributes (like attributes.queryParams.qty) are lost.

5. Conclusion for the Logger:

After the HTTP Request completes, the main flow's logger will show:

payload: "child flow finished" (changed)

vars.quantity: 30 (same - preserved)

attributes: These are now the HTTP Response attributes, not the original request attributes (changed).

Therefore, the only parts of the original Mule event that are the same (unchanged) after the HTTP Request operation are all variables.
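The before/after comparison above can be sketched in a few lines of Python. This is only a simplified model, not Mule code: the MuleEvent class, the http_request function, and the statusCode attribute are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field, replace


@dataclass(frozen=True)
class MuleEvent:
    """Simplified, hypothetical model of a Mule event."""
    payload: object
    attributes: dict
    variables: dict = field(default_factory=dict)


def http_request(event, response_body, response_attributes):
    """Model of the HTTP Request operation: the response replaces the
    payload and attributes; the caller's variables are carried over."""
    return replace(event, payload=response_body, attributes=response_attributes)


# Event that enters the HTTP Request operation in the main flow.
before = MuleEvent(
    payload="Order01",
    attributes={"queryParams": {"qty": "30"}},
    variables={"quantity": "30"},
)

# Event seen by the Logger after the call (response values are illustrative).
after = http_request(before, "child flow finished", {"statusCode": 200})

unchanged = [
    name
    for name in ("payload", "attributes", "variables")
    if getattr(before, name) == getattr(after, name)
]
print(unchanged)  # ['variables']
```

Only the variables compare equal before and after the call, which matches option B.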

Why the other options are incorrect:

A. The payload and all variables: The payload is changed by the HTTP response.

C. The entire Mule event: The payload and attributes are changed; only the variables remain.

D. The payload and all attributes: Both the payload and attributes are changed by the HTTP response. Variables are the only unchanged part.

Reference:

MuleSoft Documentation: HTTP Request Operation

The documentation explains that the operation returns a response, which becomes the new message payload. It also implicitly covers the scoping behavior where variables are preserved across the request.

An SLA based policy has been enabled in API Manager. What is the next step to configure the API proxy to enforce the new SLA policy?

A. Add new property placeholders and redeploy the API proxy

B. Add new environment variables and restart the API proxy

C. Restart the API proxy to clear the API policy cache

D. Add required headers to the RAML specification and redeploy the new API proxy

Explanation:

When you enable an SLA-based policy in API Manager, every request to the API must identify the calling client application with:

Client ID

Client Secret

The policy uses these credentials to find the SLA tier assigned to the client application and to apply that tier's limits.

By default the policy reads the credentials from the inbound request, typically from client_id and client_secret headers.

For consumers to know they must send these values, the API specification has to declare them, so the required headers are added to the RAML specification (for example as a trait applied to the resources).

After updating the specification, the API proxy must be redeployed so that it uses the new version of the API.

👉 Therefore, the correct next step is:

Add required headers to the RAML specification → Redeploy the API proxy (option D)

❌ Why the other options are wrong

A. Add new property placeholders and redeploy the API proxy

Property placeholders configure the application; they do not tell client applications which credentials to send.

The policy itself is configured in API Manager, not through properties in the proxy.

B. Add new environment variables and restart the API proxy

Environment variables are not required for SLA policy activation.

Policies are applied at runtime through API Gateway, not through environment variables.

C. Restart the API proxy to clear the API policy cache

A restart alone does not change what clients must send.

Also, API Manager deploys policies dynamically, so no restart is needed to apply a policy.

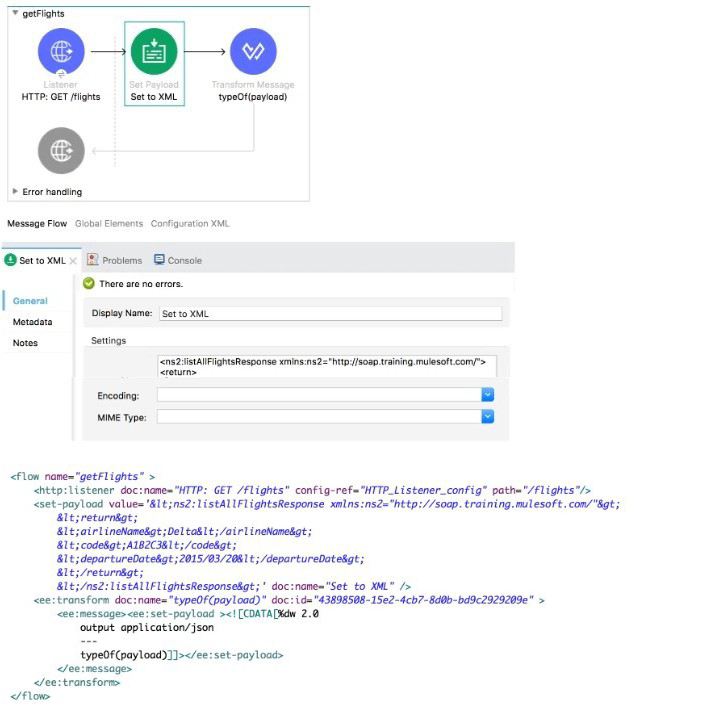

Refer to the exhibits.

A web client submits a request to http://localhost:8081/flights. What is the result at the end of the flow?

A. "string"

B. "Java"

C. "object"

D. "XML"

Explanation:

Step 1: Listener

The client sends a request to http://localhost:8081/flights. The flow begins with the HTTP Listener. At this point, there is no payload yet.

Step 2: Set Payload

The “Set to XML” component sets the payload to an XML structure. This XML represents a SOAP-like response (with tags such as

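So after this step, the payload holds XML content.

Step 3: Transform Message

In the Transform Message, the logic is:

It specifies output application/json, meaning the result will be returned in JSON format.

The body only contains typeOf(payload).

The typeOf() function returns the DataWeave type of the payload, such as String, Object, or Array. XML is a data format, not a DataWeave type, so typeOf() cannot return "XML". If the Set Payload value is a literal with no XML MIME type, the payload is a string and the function returns "String". If the payload were read as application/xml, DataWeave would parse it and return "Object".

Step 4: Evaluate the Options

A. "string" → Correct, assuming the Set Payload component does not declare an XML MIME type. The payload is XML text held in a string, so typeOf(payload) returns String.

B. "Java" → Incorrect. While Mule runs on Java internally, typeOf() does not return "Java".

C. "object" → Incorrect under the same assumption. It would be the result only if the payload were read as application/xml and parsed into a DataWeave object.

D. "XML" → Incorrect. XML is a data format, not a DataWeave type, so typeOf(payload) never returns "XML".

✅ Correct Answer: A. "string"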

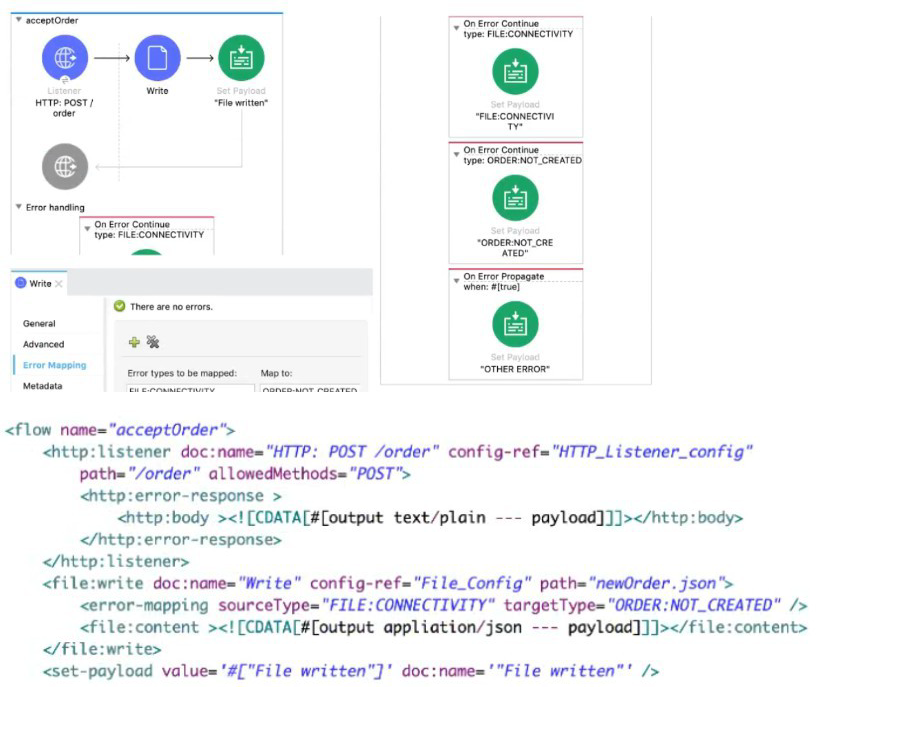

Refer to the exhibits.

A web client sends a POST request with the payload {"oid": "1000", "itemid": "AC200", "qty": "4" } to the Mule application. The File Write operation throws a FILE:CONNECTIVITY error.

What response message is returned to the web client?

A. "FILE:CONNECTIVITY"

B. "ORDER:NOT_CREATED"

C. "OTHER ERROR"

D. "File written"

Explanation:

✅ Correct Option

B. "ORDER:NOT_CREATED" 🟢

The File Write operation has an error mapping from FILE:CONNECTIVITY to ORDER:NOT_CREATED. An error mapping changes the error type at the operation, before the flow's error handler is evaluated, so the handler sees an ORDER:NOT_CREATED error rather than FILE:CONNECTIVITY. Assuming the error handler in the exhibit includes a scope for ORDER:NOT_CREATED, as this option implies, that scope sets the payload to "ORDER:NOT_CREATED", which is returned to the client as the response body.

❌ Incorrect Options

A. "FILE:CONNECTIVITY" 🔴

Although the File Write operation originally raises FILE:CONNECTIVITY, the error mapping replaces that type with ORDER:NOT_CREATED. The On Error Continue scope for FILE:CONNECTIVITY therefore no longer matches, and its payload is never set.

C. "OTHER ERROR" 🔴

This message would only appear if the flow entered the On Error Propagate branch. However, that branch only activates for errors that are not matched by an earlier scope. Since the mapped ORDER:NOT_CREATED error is already matched, "OTHER ERROR" never executes.

D. "File written" 🔴

This is the normal success message set in the flow when the file is written successfully. But because the File Write operation throws a FILE:CONNECTIVITY error, the success path never executes. Instead, the error handling path takes over, making this option invalid.

📘 Summary

In this scenario, a POST request with order details is received, but the File Write fails due to a connectivity issue. The error mapping on the File Write operation turns FILE:CONNECTIVITY into ORDER:NOT_CREATED, so the error handler matches on the mapped type and the client receives "ORDER:NOT_CREATED" rather than the original connector error.

🔗 Reference

MuleSoft Error Handling (On Error Continue and On Error Propagate)

What asset cannot be created using Design Center?

A. Mule Applications

B. API fragments

C. API specifications

D. API portals

Explanation:

Correct Option: ✅ D. API portals

API portals cannot be created in MuleSoft’s Design Center, as it is designed for creating and managing Mule applications, API specifications, and API fragments. API portals, used for publishing and managing APIs for external consumption, are created in Anypoint Exchange or API Manager, not Design Center. This distinction ensures proper separation of design and publishing functionalities within the Anypoint Platform.

Incorrect Options:

❌ A. Mule Applications

Mule applications can be created in Design Center, which provides a web-based flow designer for building and testing integrations. It supports the development of Mule flows and configurations, making this option incorrect, as Design Center is explicitly designed for creating Mule applications alongside other assets like API specifications and fragments.

❌ B. API fragments

API fragments, such as reusable RAML or OAS components, can be created in Design Center. It supports the creation and management of modular API components for reuse across specifications. This capability makes this option incorrect, as Design Center’s functionality includes authoring API fragments to promote consistency and reusability in API development.

❌ C. API specifications

API specifications, such as those in RAML or OAS, can be created in Design Center’s API Designer. It provides tools for designing, mocking, and testing APIs before implementation. This makes the option incorrect, as Design Center is specifically built to support the creation and management of API specifications within the Anypoint Platform.

Summary:

In MuleSoft’s Design Center, users can create Mule applications, API specifications, and API fragments to design and test integrations and APIs. However, API portals, which are used to publish and manage APIs for external users, are created in Anypoint Exchange or API Manager, not Design Center. This separation ensures that Design Center focuses on development, while other platform components handle API publishing and governance.

References:

MuleSoft Documentation: Design Center Overview

MuleSoft Documentation: Anypoint Exchange

MuleSoft Documentation: API Manager

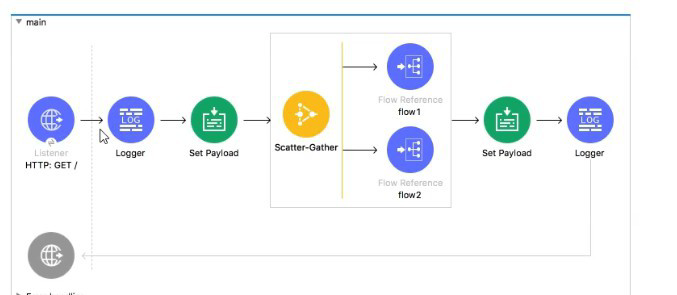

Refer to the exhibit.

In the execution of the Scatter_Gather, the flow1 route completes after 10 seconds and the flow2 route completes after 20 seconds.

How many seconds does it take for the Scatter_Gather to complete?

A. 0

B. 10

C. 20

D. 30

Explanation:

✅ Correct Option:

C. 20

The Scatter-Gather component executes all of its defined routes concurrently. Its completion time is determined by the longest-running route, as it must wait for all routes to finish before aggregating the results and proceeding. In this scenario, flow2 is the slowest route, taking 20 seconds to complete. Therefore, the total time for the Scatter-Gather to finish is 20 seconds, dictated by this slowest route.

❌ Incorrect Options:

A. 0

This is incorrect because Scatter-Gather is a blocking processor. It does not complete instantly; it actively processes the routes and waits for their results. A value of 0 would imply it finished immediately without executing any logic.

B. 10

This is incorrect because it represents the time of the fastest route (flow1). While flow1 finishes at the 10-second mark, the Scatter-Gather must continue to wait for the slower flow2 to complete its 20-second execution before it can finish.

D. 30

This is incorrect because the routes run in parallel, not sequentially. Their execution times are not added together. The total time is the maximum of the individual route times (20 seconds), not the sum of them (10 + 20 = 30).

📋 Summary:

The Scatter-Gather component processes its routes simultaneously. Its total execution time is governed by the duration of the slowest concurrent process. Since flow1 takes 10 seconds and flow2 takes 20 seconds, the component must wait the full 20 seconds for flow2 to finish before it can complete and move to the next processor in the main flow.
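The timing argument can be checked with a small Python sketch. It is an analogy using a thread pool, not Mule's Scatter-Gather itself; the route and scatter_gather functions and the SCALE constant are hypothetical, and the durations are scaled down so the example runs in a fraction of a second.

```python
import time
from concurrent.futures import ThreadPoolExecutor

SCALE = 0.01  # one "flow second" is simulated as 10 ms


def route(name, seconds):
    """Stand-in for a route: sleep for the scaled duration, then return."""
    time.sleep(seconds * SCALE)
    return f"{name} done"


def scatter_gather(routes):
    """Run every route concurrently and wait until all of them finish."""
    start = time.monotonic()
    with ThreadPoolExecutor(max_workers=len(routes)) as pool:
        futures = [pool.submit(route, name, secs) for name, secs in routes]
        results = [future.result() for future in futures]
    elapsed = (time.monotonic() - start) / SCALE  # back to "flow seconds"
    return results, elapsed


results, elapsed = scatter_gather([("flow1", 10), ("flow2", 20)])
print(results)
print(f"elapsed: about {elapsed:.1f} simulated seconds")
```

The measured time should land just above 20 simulated seconds, close to the slowest route and well below the sum of 30.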

🔗 Reference:

MuleSoft Documentation: Scatter-Gather

In an application network, if the implementation but not the interface of a product API changes, what needs to be done to the other APIs that consume the product API?

A. The applications associated with the other APIs must be restarted

B. The applications associated with the other APIs must be recoded

C. The other APIs must be updated to consume the updated product API

D. Nothing needs to be changed in the other APIs or their associated applications

Explanation:

Correct Option:

✅ D. Nothing needs to be changed in the other APIs or their associated applications

This is the fundamental principle of a well-designed, modern API-led application network. The interface of an API acts as a contract 🤝, which defines what the API does, what data it expects, and what data it returns. The implementation is the internal logic of how it achieves this. By keeping the interface stable, you ensure that any consuming APIs that are already built to that contract will continue to work without modification, even if the underlying code or systems change. This decoupling is a major benefit of API-led connectivity, as it allows for independent development and maintenance.

Incorrect Options:

❌ A. The applications associated with the other APIs must be restarted

Restarting applications is only necessary for changes that affect their runtime environment or configuration, such as applying a new policy or updating a property. Since the API's interface (its contract) hasn't changed, the consumer applications aren't aware of any updates and don't need to be restarted. The implementation change is transparent to them.

❌ B. The applications associated with the other APIs must be recoded

Recoding is a drastic measure and would only be required if the API's interface changed in a breaking way (e.g., a change to a URL path, a renamed field, or a different data type). Since the prompt specifies that only the implementation changed, the contract remains valid, and no recoding is necessary. This would be a failure of good API design principles.

❌ C. The other APIs must be updated to consume the updated product API

This option is incorrect because the consuming APIs are already designed to the original interface. A change in the implementation is meant to be transparent to the consumers. Updating them would be a waste of effort and would defeat the purpose of decoupling. An API is treated as a reusable product, and a key benefit is its stability for consumers.

Summary:

The core concept at play here is the separation of API interface (the public contract) and API implementation (the internal logic). In a robust application network, an API's interface serves as a stable contract that consumers can rely on. If a change is made to the implementation—for example, swapping out a database or refactoring the internal code—but the interface (endpoints, parameters, and response formats) remains the same, the change is non-breaking. This means that all other APIs and applications that consume it can continue to operate normally without any changes, restarts, or redeployments, promoting agility and reusability.
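The separation of interface and implementation can be illustrated with a short Python sketch. All class and function names here are hypothetical and stand in for a product API and one of its consumers; it is not how an API is built in Mule, only a picture of the contract idea.

```python
from typing import Protocol


class ProductApi(Protocol):
    """The interface (contract) that consumers depend on."""

    def get_product(self, product_id: str) -> dict: ...


class DatabaseBackedProductApi:
    """Original implementation (hypothetical)."""

    def get_product(self, product_id: str) -> dict:
        return {"id": product_id, "name": "Widget"}


class CacheBackedProductApi:
    """Changed implementation: same method signature and response shape."""

    def __init__(self) -> None:
        self._cache: dict = {}

    def get_product(self, product_id: str) -> dict:
        if product_id not in self._cache:
            self._cache[product_id] = {"id": product_id, "name": "Widget"}
        return self._cache[product_id]


def consumer(api: ProductApi, product_id: str) -> str:
    """A consuming API, written once against the contract."""
    product = api.get_product(product_id)
    return f"{product['id']}: {product['name']}"


print(consumer(DatabaseBackedProductApi(), "P1"))  # P1: Widget
print(consumer(CacheBackedProductApi(), "P1"))     # P1: Widget
```

The consumer function is identical in both calls; only the object behind the contract changed, which is why option D applies.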

As part of a project requirement, you want to build an API for a legacy client. The legacy client can only consume SOAP web services. Which type of interface documentation can be prepared to meet the requirement?

A. RAML file to define SOAP services

B. WSDL file

C. JSON file

D. plain text file documenting API's

Explanation:

✅ Correct Option

B. WSDL file 🟢

A WSDL (Web Services Description Language) file is the standard way to describe SOAP web services. It defines the operations (methods), request/response structures, and endpoint details in XML format. Legacy clients that consume SOAP services rely on WSDL to understand how to call the API. Therefore, preparing a WSDL file is the correct way to meet the requirement.

❌ Incorrect Options

A. RAML file to define SOAP services 🔴

RAML (RESTful API Modeling Language) is used to document and design REST APIs, not SOAP services. It cannot be used by SOAP clients because SOAP clients require WSDL, not RAML. Choosing this would not make the service consumable by a legacy SOAP client.

C. JSON file 🔴

JSON is a lightweight data-interchange format commonly used in REST APIs. SOAP clients do not understand JSON metadata to discover or consume services. While JSON could describe data, it cannot define SOAP operations or endpoints like WSDL does.

D. plain text file documenting API's 🔴

Although plain text documentation can describe an API in human-readable form, SOAP clients cannot consume it. SOAP requires machine-readable contracts, and only WSDL provides that functionality. Therefore, plain text would not be sufficient.

📘 Summary

To support a legacy client that only consumes SOAP web services, you must prepare a WSDL file. WSDL is the contract that describes SOAP endpoints, operations, and data formats. Other documentation methods like RAML, JSON, or plain text are not suitable because they cannot be parsed or used by SOAP clients.

🔗 Reference

WSDL Introduction – W3C (official)
